More

- Topic1/3

20k Popularity

12k Popularity

20k Popularity

7k Popularity

3k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

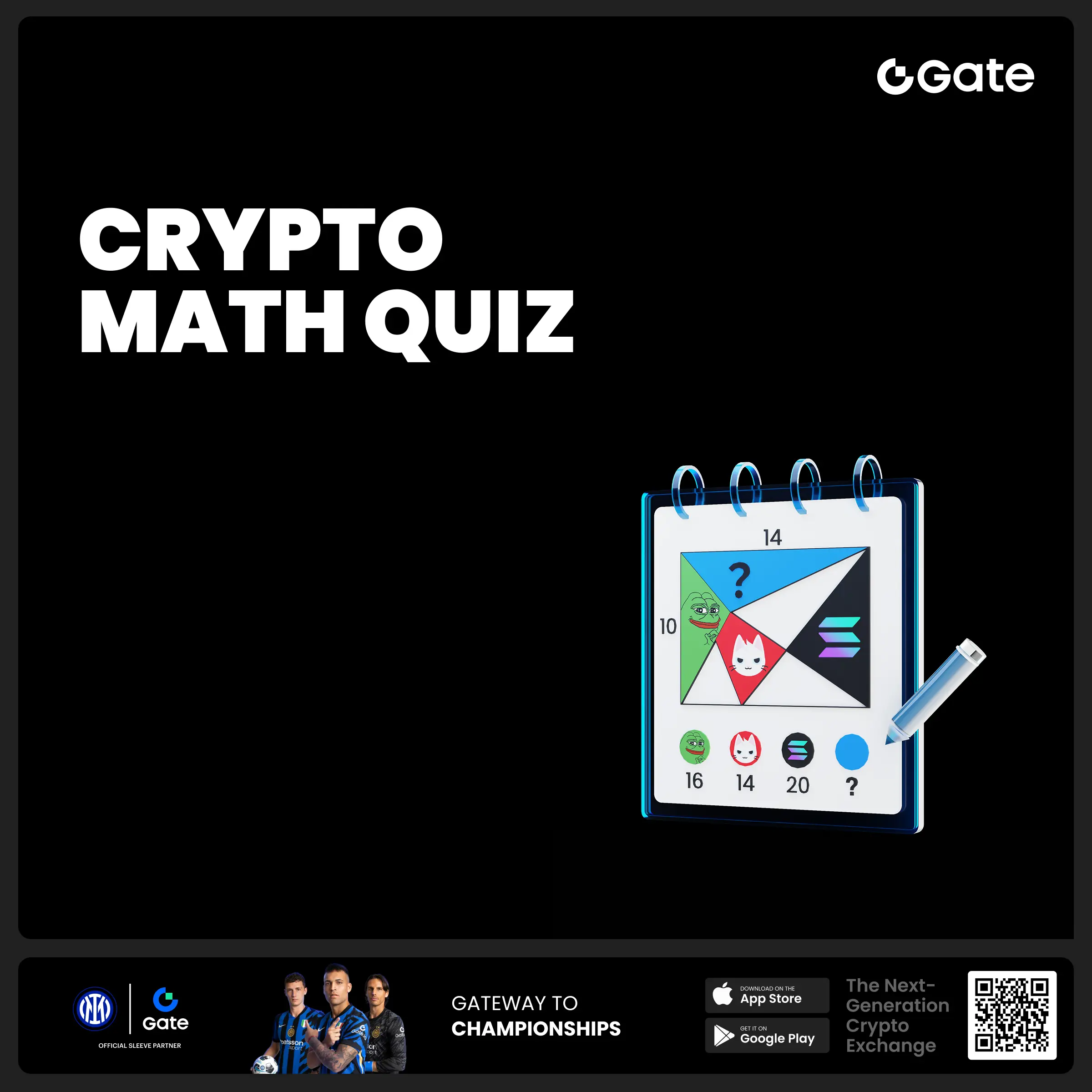

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 [Gate 30 Million Milestone] Share Your Gate Moment & Win Exclusive Gifts!

Gate has surpassed 30M users worldwide — not just a number, but a journey we've built together.

Remember the thrill of opening your first account, or the Gate merch that’s been part of your daily life?

📸 Join the #MyGateMoment# campaign!

Share your story on Gate Square, and embrace the next 30 million together!

✅ How to Participate:

1️⃣ Post a photo or video with Gate elements

2️⃣ Add #MyGateMoment# and share your story, wishes, or thoughts

3️⃣ Share your post on Twitter (X) — top 10 views will get extra rewards!

👉

Manus outperforms same-tier models, reigniting the debate over AI's development path

Manus achieves GAIA benchmark SOTA results, sparking discussions on AI development paths

Manus has posted state-of-the-art results on the GAIA benchmark, outperforming other models in its tier. The results suggest it can independently handle complex, multi-stage tasks such as multinational business negotiations, which involve contract term analysis, strategy formulation, and proposal generation. Its advantages lie in dynamic goal decomposition, cross-modal reasoning, and memory-enhanced learning. It can break a large task into hundreds of executable subtasks, handle several types of data at once, and use reinforcement learning to keep improving decision-making efficiency and lowering error rates.

The breakthrough has revived a long-running question in the field: will the future be dominated by a single Artificial General Intelligence (AGI), or by collaborating Multi-Agent Systems (MAS)?

The design concept of Manus encompasses two possibilities:

AGI path: Continuously raise the intelligence of a single agent until it approaches human-level, comprehensive decision-making.

MAS path: Act as a super-coordinator that directs many vertical-domain agents working together.

This debate touches on a core question in AI development: how to balance efficiency and safety. As a single agent approaches AGI, the risk posed by its opaque decision-making grows. Multi-agent collaboration spreads that risk, but communication delays may cause the system to miss critical decision windows.

The progress of Manus also highlights risks inherent in AI development. In medical scenarios, Manus would need real-time access to sensitive patient data; in financial negotiations, it may handle undisclosed company information. There is also algorithmic bias: it might, for example, make unfair salary suggestions for specific groups during recruitment negotiations, or misjudge terms more often for emerging industries during legal contract reviews. Another risk is adversarial attacks, in which hackers could interfere with Manus's judgment during a negotiation by injecting crafted audio signals.

These challenges highlight a key issue: the smarter the AI system, the broader its potential attack surface.

In the Web3 space, security has always been a core concern, and several security approaches have grown out of it:

Zero Trust Security Model: Emphasizes strict authentication and authorization for every access request.

Decentralized Identity (DID): Lets users hold and prove a digital identity without relying on a central registry.

Fully Homomorphic Encryption (FHE): Allows computation on encrypted data without decrypting it.
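To make the Zero Trust item in the list above concrete, here is a minimal sketch of per-request authentication and authorization using only Python's standard library. The agent names, the `POLICY` table, the shared key, and the 30-second freshness window are all hypothetical choices for illustration; the article does not describe how any real system implements this.

```python
import hashlib
import hmac

# Hypothetical shared key and per-agent permissions, for illustration only.
SHARED_KEY = b"demo-shared-secret"
POLICY = {
    "contract-agent": {"read_contract"},
    "finance-agent": {"read_contract", "approve_payment"},
}

def sign_request(agent_id: str, action: str, timestamp: int) -> str:
    """Return an HMAC-SHA256 tag binding the agent, the action, and the time."""
    message = f"{agent_id}|{action}|{timestamp}".encode()
    return hmac.new(SHARED_KEY, message, hashlib.sha256).hexdigest()

def authorize(agent_id: str, action: str, timestamp: int,
              signature: str, now: int, max_age: int = 30) -> bool:
    """Check every request from scratch: authenticity, freshness, permission."""
    expected = sign_request(agent_id, action, timestamp)
    if not hmac.compare_digest(expected, signature):
        return False  # tag does not match: forged or tampered request
    if abs(now - timestamp) > max_age:
        return False  # too old: possible replay
    return action in POLICY.get(agent_id, set())  # least-privilege check

tag = sign_request("contract-agent", "read_contract", 1000)
print(authorize("contract-agent", "read_contract", 1000, tag, now=1010))
```

Nothing is trusted because of an earlier successful call: each request carries its own proof and is checked against the policy again.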

Of these, fully homomorphic encryption is widely regarded as a powerful tool for AI-era security: because data stays encrypted throughout computation, a service can process it without ever seeing the plaintext, which opens new possibilities for privacy protection.
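The idea of computing on ciphertexts can be illustrated with a toy version of the Paillier cryptosystem. Note the swap: Paillier is only additively homomorphic, not fully homomorphic, and it is used here because it fits in a few lines. Real FHE schemes such as BFV, CKKS, or TFHE also support multiplication of ciphertexts and are far more involved. The primes below are tiny by cryptographic standards and the function names are this sketch's own, so treat it as a demonstration of the principle, not a usable implementation.

```python
import math
import secrets

# Two well-known Mersenne primes; far too small for real security.
P = 2**31 - 1
Q = 2**61 - 1

def keygen(p: int = P, q: int = Q):
    """Return (public modulus n, private key (lam, mu))."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; this shortcut assumes g = n + 1
    return n, (lam, mu)

def encrypt(n: int, m: int) -> int:
    """Encrypt 0 <= m < n with fresh randomness r, using generator g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) * pow(r, n, n2)) % n2

def decrypt(n: int, priv, c: int) -> int:
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)

def scale_encrypted(n: int, c: int, k: int) -> int:
    """Raising a ciphertext to the power k multiplies the plaintext by k."""
    return pow(c, k, n * n)

n, priv = keygen()
salary_a = encrypt(n, 5200)
salary_b = encrypt(n, 4800)
total = add_encrypted(n, salary_a, salary_b)  # computed without decrypting
print(decrypt(n, priv, total))  # 10000
```

Whoever runs `add_encrypted` needs only the public modulus `n`; the private key never leaves the data owner, which is the property the article attributes to FHE.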

In addressing AI safety challenges, FHE can play a role on multiple levels:

Data layer: All information entered by users is processed in encrypted form, so even the AI system itself cannot decrypt the original data.

Algorithm layer: FHE enables "encrypted model training", so the AI's decision-making process is not exposed.

Collaboration layer: Communication between agents uses threshold encryption, so that a leak at a single point cannot expose data globally.
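The threshold idea in the last item can be sketched with Shamir's secret sharing, a standard building block of threshold cryptography. This is not a full threshold encryption scheme, and the article does not say which scheme it has in mind. The field prime, the 3-of-5 setting, and the function names are assumptions made for this illustration.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime used as the field modulus

def split(secret: int, n: int, k: int):
    """Split `secret` into n shares; any k of them can reconstruct it."""
    # Random polynomial of degree k-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def combine(shares) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to recover the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # Fermat inverse works because PRIME is prime.
        secret = (secret + yi * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret

key = 123456789
shares = split(key, n=5, k=3)   # one share per agent
print(combine(shares[:3]) == key)
```

With a 3-of-5 split, an attacker who compromises one or two agents learns nothing about the key, while any three cooperating agents can still recover it.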

Web3 security technology may still seem remote to the average user, but its importance cannot be ignored. In a field this adversarial, only continuously strengthened defenses keep users from becoming victims.

As AI approaches human-level intelligence, non-traditional defense systems are becoming increasingly important. FHE not only addresses today's security problems but also lays the groundwork for an era of strong AI. On the road to AGI, FHE has shifted from an option to a necessity for survival.